The Foundation of Trust: An Introduction to Data Quality Management

In the modern data-driven economy, the old adage "garbage in, garbage out" has never been truer. Data Quality Management (DQM) is the practice of ensuring that an organization's data is accurate, consistent, and fit for purpose. It is a comprehensive discipline that combines technology, processes, and people to continuously measure, monitor, and improve the quality of an enterprise's data assets, and it is the foundational layer upon which successful data analytics, business intelligence, and artificial intelligence initiatives are built. The growing recognition of data as a critical corporate asset is driving significant market growth: the industry is estimated to reach a valuation of USD 10.69 billion by 2035, expanding at a compound annual growth rate of 9.22% during the 2025-2035 forecast period.

At its core, data quality management is about assessing data against a set of key dimensions. These typically include accuracy (is the data correct?), completeness (is any data missing?), consistency (is the data uniform across different systems?), timeliness (is the data up-to-date?), and validity (does the data conform to a defined format or set of rules?). For example, a customer record might have an accuracy issue if the address is misspelled, a completeness issue if the phone number is missing, and a consistency issue if the customer's name is spelled differently in the sales system and the marketing system. DQM provides the tools and methodologies to systematically identify and remediate these types of issues across an organization's entire data landscape.
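To make the five dimensions concrete, the customer-record example above can be sketched as a handful of simple checks. This is a minimal illustration, not a reference implementation: the field names, the `assess_record` function, the trusted reference record, and the 365-day freshness threshold are all assumptions chosen for the example, and the email pattern is deliberately loose.

```python
import re
from datetime import date

# Hypothetical schema and rules, chosen only for this illustration.
REQUIRED_FIELDS = ("name", "address", "phone", "email")
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # loose format check


def assess_record(record, reference, other_system, today, max_age_days=365):
    """Return the set of quality dimensions on which the record fails."""
    failed = set()
    # Accuracy: compare against a trusted reference value (assumed to exist).
    if record.get("address") != reference.get("address"):
        failed.add("accuracy")
    # Completeness: every required field must be present and non-empty.
    if any(not record.get(field) for field in REQUIRED_FIELDS):
        failed.add("completeness")
    # Consistency: the same customer should have the same name in both systems.
    if record.get("name") != other_system.get("name"):
        failed.add("consistency")
    # Timeliness: the record must have been updated recently enough.
    if (today - record["last_updated"]).days > max_age_days:
        failed.add("timeliness")
    # Validity: a populated email must match the expected format.
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        failed.add("validity")
    return failed


sales_record = {
    "name": "Jane Doe",
    "address": "12 Mian Street",      # misspelled street name
    "phone": "",                      # missing phone number
    "email": "jane.doe@example.com",
    "last_updated": date(2024, 1, 10),
}
reference_record = {"address": "12 Main Street"}
marketing_record = {"name": "Jane Do"}  # name spelled differently

failed = assess_record(
    sales_record, reference_record, marketing_record, today=date(2024, 6, 1)
)
print(sorted(failed))  # ['accuracy', 'completeness', 'consistency']
```

In practice, accuracy is the hardest dimension to automate because it needs a trusted source to compare against; the other four can usually be checked from the data and its rules alone.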

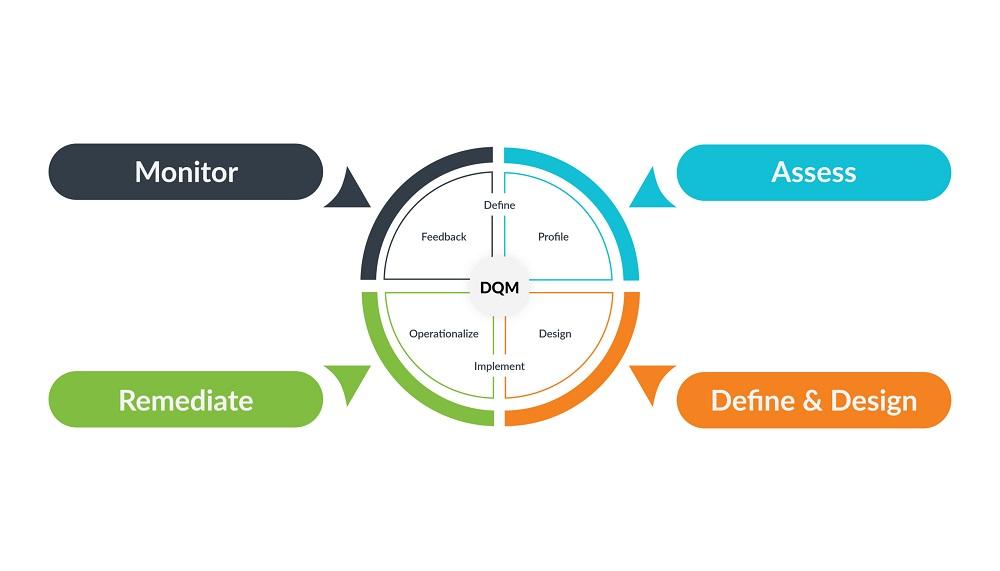

The process of data quality management is a continuous cycle, not a one-time project. It begins with data profiling, where specialized software is used to scan data sources to discover their structure, content, and the quality issues within them. This is followed by data cleansing and standardization, where rules are applied to correct errors, remove duplicates, and format the data in a consistent way. A key part of the process is data monitoring, where data quality rules are applied continuously to new data as it enters a system, and alerts are generated when any data fails to meet the defined quality standards. This proactive approach helps to prevent bad data from entering the system in the first place, which is far more efficient than cleaning it up later.
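The three stages described above (profiling, cleansing and standardization, and monitoring) can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `profile`, `cleanse`, and `monitor` functions, the two-field record layout, and the rule names are all hypothetical, and dedicated DQM tools do far more than this.

```python
from collections import Counter


def profile(rows, fields):
    """Profiling: count rows and missing values per field."""
    missing = Counter()
    for row in rows:
        for field in fields:
            if not (row.get(field) or "").strip():
                missing[field] += 1
    return {"rows": len(rows), "missing": dict(missing)}


def cleanse(rows):
    """Cleansing: standardize formatting, then drop resulting duplicates."""
    cleaned, seen = [], set()
    for row in rows:
        standardized = {
            # Collapse extra whitespace and title-case the name (a
            # simplification; real names need more careful handling).
            "name": " ".join(row.get("name", "").split()).title(),
            "email": row.get("email", "").strip().lower(),
        }
        key = (standardized["name"], standardized["email"])
        if key not in seen:
            seen.add(key)
            cleaned.append(standardized)
    return cleaned


def monitor(row, rules):
    """Monitoring: return the names of the rules an incoming row violates."""
    return [name for name, rule in rules.items() if not rule(row)]


# Hypothetical quality rules applied to every new record.
RULES = {
    "name_present": lambda row: bool(row["name"]),
    "email_present": lambda row: bool(row["email"]),
}

rows = [
    {"name": "jane  doe", "email": " Jane.Doe@Example.com "},
    {"name": "Jane Doe", "email": "jane.doe@example.com"},
    {"name": "Sam Lee", "email": ""},
]

report = profile(rows, ["name", "email"])
cleaned = cleanse(rows)
violations = monitor({"name": "Sam Lee", "email": ""}, RULES)

print(report)
print(len(cleaned))
print(violations)
```

Note that the two "Jane Doe" rows only become recognizable as duplicates after standardization, which is why cleansing rules are typically applied before de-duplication. A non-empty `violations` list is what would trigger an alert in a monitoring setup.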

Ultimately, the goal of data quality management is to build a foundation of trusted data that the entire organization can rely on to make critical business decisions. Without a high level of data quality, business intelligence reports can be misleading, marketing campaigns can be sent to the wrong people, and machine learning models can produce inaccurate predictions. By establishing a formal DQM program, organizations can increase their operational efficiency, reduce the risks associated with poor data, and, most importantly, unlock the full strategic value of their data as a corporate asset. That value is what provides a clear and compelling return on investment for the technology and processes involved.
